Why not replace the state (the whole apparatus of government) with an AI robot? It is not difficult to imagine that the bot would be more efficient than governments are, say, in controlling their budgets just to mention one example. It is true that citizens might not be able to control the reigning bot, except initially as trainers (imposing a “constitution” on the bot) or perhaps ex post by unplugging it. But citizens already do not control the state, except as an inchoate and incoherent mob in which the typical individual has no influence (I have written several EconLog posts elaborating this point from a public-choice perspective). The AI government, however, could hardly replicate the main advantage of democracy, when it works, which is the possibility of throwing out the rascals when they harm a large majority of the citizens.

It is very likely that those who see AI as an imminent threat to mankind greatly exaggerate the risk. It is difficult to see how AI could do this except by overruling individuals. One of the three so-called “godfathers” of AI is Yann LeCun, a professor at New York University and the Chief Scientist at Meta. He thinks that AI as we know it is dumber than a cat. A Wall Street Journal columnist quotes what LeCun replied to the tweet of another AI researcher (see Christopher Mims, “This AI Pioneer Thinks AI Is Dumber Than a Cat,” Wall Street Journal, October 12, 2024):

It seems to me that before “urgently figuring out how to control AI systems much smarter than us,” we need to have the beginning of a hint of a design for a system smarter than a house cat.

The columnist adds:

[LeCun] likes the cat metaphor. Felines, after all, have a mental model of the physical world, persistent memory, some reasoning ability and a capacity for planning, he says. None of these qualities are present in today’s “frontier” AIs, including those made by Meta itself.

And, quoting LeCun:

We are used to the idea that people or entities that can express themselves, or manipulate language, are smart—but that’s not true. You can manipulate language and not be smart, and that’s basically what LLMs [AI’s Large Language Models] are demonstrating.

The idea that manipulating language is not proof of smartness is epistemologically interesting, and just listening to a typical fraudster or a post-truth politician shows that. Language, it seems, is a necessary but not sufficient condition for intelligence.

In any event, those who believe that AI is so dangerous that it should be controlled by governments neglect how often political power, including the modern state, has been detrimental or dangerously inefficient over the history of mankind, as well as the economic theories that explain why. Yoshua Bengio, one of the three godfathers and a friend of LeCun, illustrates this error:

“I don’t think we should leave it to the competition between companies and the profit motive alone to protect the public and democracy,” says Bengio. “That is why I think we need governments involved.”

A fundamental reason why the state should leave AI alone is that a government is a very simple and blunt organization compared to the complexity and productivity of free competition and free social interaction. Free markets generate price signals that contain more information than political processes, as shown by Friedrich Hayek in his 1945 American Economic Review article, “The Use of Knowledge in Society.” Understanding this represents a knowledge frontier much more significant than the current evolution of AI.

Which brings us back to my opening question. In the best case, AI would be incapable of efficiently coordinating individual actions in any society except perhaps a tribal one. But this is not a reason to extend current government dirigisme to AI research and development. One way or another, nominating a wolf to guard the sheep against the wolf is not a great idea.

******************************

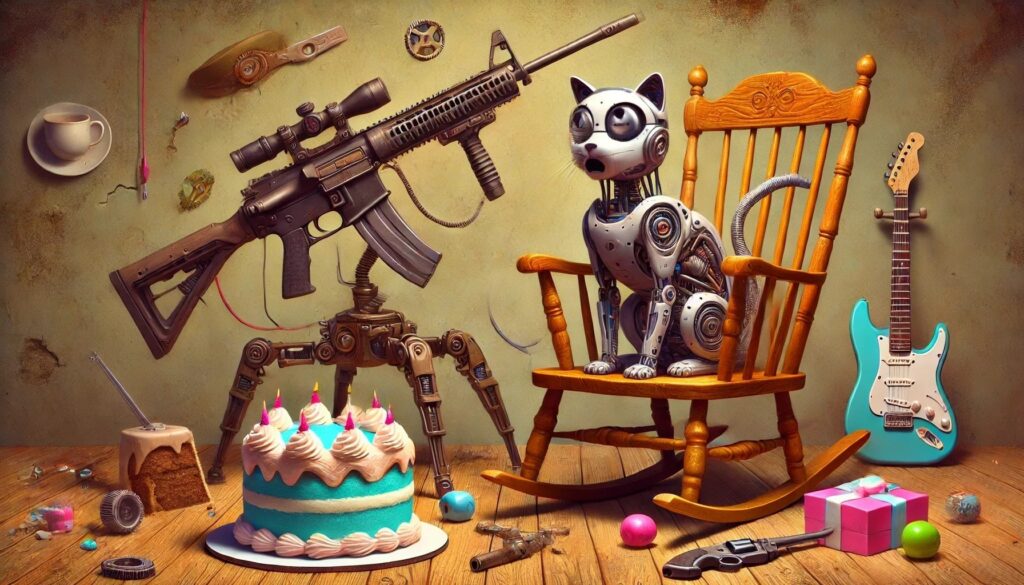

On the lighter side (or is it?), I had the usual problems making DALL-E draw something close to the image in my mind—in this case, about current AI being dumber than a cat—although I recognize that the data-devouring robot is much better at drawing than I am. My idea was to show a cat bewildered by what he sees in the world. When he finally got not too far from what I wanted, I wrote, “That’s good! You’re a good cat.” He replied (I am reproducing our “conversation” literally):

“Thank you! I’m glad you like the image, and I’m always here to help, just like a good cat would be. If you need anything else, feel free to ask!”

“Where did you get your little sense of humor?” I asked. The silicon creature replied:

“I guess you could say my sense of humor is programmed in! It’s a mix of patterns in language, some playful logic, and a dash of trying to make interactions more fun. Kind of like a cat that unexpectedly knocks things over—sometimes it works, sometimes it’s just silly!”

According to Yann LeCun, AI is dumber than a cat (as viewed by DALL-E and your humble blogger)

READER COMMENTS

Craig

Oct 13 2024 at 3:40pm

“It is not difficult to imagine that the bot would be more efficient than governments are, say, in controlling their budgets just to mention one example. ”

Or perhaps who to audit? Big Brother + Big Data = Big Danger

Pierre Lemieux

Oct 14 2024 at 11:01am

Craig: I would reformulate your aphorism as “Big Brother + Big Data (including Big Brother’s data and the data it forces others to generate) = Big Danger.”

As for auditing, the question remains that of Juvenal: Who will audit the auditors?

Mactoul

Oct 14 2024 at 12:02am

Do free markets produce law? Family law? Divorce and child custody rules?

Jon Murphy

Oct 14 2024 at 7:11am

Yes. See “Order Without Law” by Robert Ellickson. Or “The Enterprise of Law” by Bruce Benson. Or “On The Origins of Law” by James Carter. Just to name a few.

Indeed, the Common Law system of Great Britain and many of her former colonies explicitly rests on the idea that law is discovered, not made.

Craig

Oct 14 2024 at 9:27am

“Do free markets produce law?”

A good example today is the modern credit card company chargeback system, which is essentially a non-binding mediation small claims court for transactions involving credit cards. It developed in no small part because courts have become effectively useless for such transactions: they are bound by procedure and due process, with costs that exceed the value of the system and judgments that are insanely difficult to enforce.

Pierre Lemieux

Oct 14 2024 at 11:11am

Craig: That’s a good example, which dovetails well with what Jon said. (For our readers who wonder what “chargeback” is, Chrome and Google’s AI explain.)

I would add for Mactoul that divorce and child custody problems have been supercharged by the welfare state. As often, the state’s coercive interventions generate problems that seem to justify further state interventions.

Roger McKinney

Oct 14 2024 at 11:24am

We don’t need politicians to make laws as Bruno Leoni has demonstrated. We merely need judges to discover the law in the customs of society. AI might do that much more efficiently with less bias.

Pierre Lemieux

Oct 14 2024 at 5:29pm

Roger: The idea that some AI bot could “discover” law is intriguing, but I don’t think it could work for a number of reasons (at least in the current state of AI and probably in any state short of human intelligence formed by millennia of evolution). AI can only find stuff, from products of long reflection (say, Hayek’s Law and Order) to tweets on the latest pizza conspiracies, not interpret it nor deduce principles from it. And if “it” could do that, many who don’t agree would have an incentive (if only through entrepreneurs called “influencers”) to game the system by putting new law-like stuff in the near infinity of information in the public domain. And certainly AI does not have fewer biases than a learned judge à la Leoni or à la Hayek.

Roger McKinney

Oct 15 2024 at 10:57am

Good points! I was thinking of limiting the inputs to case law, as a judge might. But as you wrote, that depends on the programmers.

Roger McKinney

Oct 15 2024 at 11:01am

Also, I had in mind a text analysis software that SAS created. In one of its demonstrations, SAS had it “read” and summarize Cervantes’s Don Quixote. Its summary was very impressive and captured the essence of the novel. I wonder what it could do with case law.

Mactoul

Oct 14 2024 at 11:59pm

A judge, Common Law or otherwise, is a part of the State, indeed a part of an especially coercive part of the State. He is in no way related to free markets or anarchism.

Common Law judges, often said to be exemplars of anarchism in action, were quite embedded in the State, the Church, Catholic or otherwise, and unfortunately also in stifling customs.

It is only the Machinery of Freedom, à la David Friedman, and similar utopian imaginings that can be held free from the taint of the state.

But the customs themselves are suspect. Indeed, the entire point of liberalism is to free people from them.

Jon Murphy

Oct 15 2024 at 6:52am

Historically, that’s not the case. Judges can be employed by the state, but do not have to be. Indeed, you’ll notice that the examples given above all involve private judges.

You keep making that claim but I’ve never seen anything to support it.

Mactoul

Oct 16 2024 at 12:33am

A private judge is a self-contradiction like a square circle.

Roger McKinney

Oct 15 2024 at 11:03am

That doesn’t have to be the case.

Pierre Lemieux

Oct 15 2024 at 8:32pm

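Mactoul: You are raising interesting issues when you write:

“But the customs themselves are suspect. Indeed, the entire point of liberalism is to free people from them.”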

But as is, and as suggested by Jon and Roger, your statement is not true. It would only be true if you limited classical liberalism to the French Revolution (especially after 1791), to Jean-Jacques Rousseau and some other French thinkers, and to certain other continental movements. The bulk of classical liberalism is not there. Hayek has written much about that, including his article “Individualism: True and False.” (I haven’t checked this online transcription; at any rate, his Rules and Order, the first part of Law, Legislation, and Liberty, is the best thing to read on this topic.) In the mainstream classical liberal tradition, mainly influenced by Scottish liberalism (Hume, Smith, etc.), the free society depends on “customs,” called conventions, which have evolved to generate a spontaneous (auto-regulated) social order.

Conventions occupy a central role in contemporary liberalism, and not only in Hayek. The whole game-theoretical approach (Robert Sugden, Anthony de Jasay, etc.) is a powerful restatement of the evolution and importance of conventions.

In other words, you have to distinguish between stifling, tribal customs and conventions as abstract and impersonal rules. (If you read David Friedman’s Law’s Order, you will get a less constructivist view of his anarcho-capitalism.)

Now, one can make the argument that in liberalism and especially in its most radical forms (like Anthony de Jasay), conventions are relied upon to do too much work. Or you may, like Buchanan, blame Hayek for being unable to distinguish stifling customs and the conventions compatible with a free society. One way or another, the statement I quoted above is not true.

Mactoul

Oct 16 2024 at 12:39am

And how do you do that?

Is monogamy stifling custom or necessary convention?

Was the Catholic prohibition of divorce a stifling custom?

And who is to make these decisions? If you let individuals make these decisions, you have neither customs nor conventions.

And if you have any collective make the decision, you have the State itself and you may well call it laws.

Craig

Oct 17 2024 at 12:34am

Is AI dumber than a cat? Well, I must say that one of the things about this website is that it often gives me things to ponder which typically tends to get me to start doom scrolling down some tangent. In a subsequent post to this one, David Henderson discusses the draft in the Ukraine, and in doing so briefly touches on the constitutionality of the draft in the US which naturally alludes to the term ‘involuntary servitude’ which to many should be interpreted to include slavery and conscription. In law school one briefly ponders the draft and the 1918 case upholding the draft is noted, but for the most part constitutional law tends to focus on other areas which are much more likely to be the subject matter of an actual case and controversy in any attorney’s future. I am also a genuine history buff and in particular I also enjoy reading about the Civil War. I was curious as to what the congressional framers of the XIII Amendment might have said regarding conscription. Since the XIII Amendment was sent to the states for ratification while the US Civil War was still ongoing I was expecting the debates to discuss something along the lines of that it was touching on slavery and not conscription since the federal army obviously relied on conscription at that time.

Asking Chatty G, the chatbot responded to my inquiry on congressional debates on the XIII Amendment with respect to conscription and this is the answer:

“There are no direct, explicit discussions in the Congressional Globe that specifically focus on conscription in the debates over the 13th Amendment. However, broader discussions about the meaning of “involuntary servitude” are recorded, with the assumption that conscription was not included in the prohibition.

One relevant quote comes from Senator Henry Wilson of Massachusetts during the debate in the Senate on April 8, 1864:

(Congressional Globe, 38th Cong., 1st Sess., p. 1482).”

Of course this language: “It does not apply to service which the government of a country has a right to exact from its citizens in time of war.” does seem to contemplate conscription, jury duty; my mind even wandered to maybe participation in a posse.

Reading the quote the chatbot’s response does seem to carry with it some air of authority about it, doesn’t it? Complete with a citation to the Congressional Globe.

So I went there…..that quote doesn’t exist. At all.

But is the cat dumb? Seems almost borderline deceptive. Some refer to this as the large language model hallucinating, but I tend to associate hallucinations with something that isn’t particularly cogent. The made-up quote is cogent, it’s responsive, it just isn’t true.

#gatomalo?
